SH introduces MCP Support for AI Integrations

Alexander Stasiak

Nov 27, 2025・10 min read

Table of Contents

1. What MCP Is and How It Standardizes Communication Between AI and Tools

Understanding MCP

Why It Matters

2. What Changes in Practice — Easier AI Connections to Data, APIs, and Tools

Before MCP vs. After MCP

Practical Use Cases

Compared with LangChain and Other Frameworks

Where LangFuse Fits In

3. Adding MCP to Client Applications — Cybersecurity Case Example

Challenge

Solution

Results

4. Enterprise Benefits — Security, Interoperability, and Compliance

Security

Interoperability and Modularity

Compliance Readiness

Observability and Operational Control

5. How We Support Our Clients

FAQ: Understanding MCP and AI Interoperability

What is the Model Context Protocol (MCP)?

How is MCP different from LangChain or LangFuse?

Can MCP be used with any AI model?

Is MCP production-ready?

Why should enterprises adopt MCP now?

Recommended Sources for MCP / AI Integration


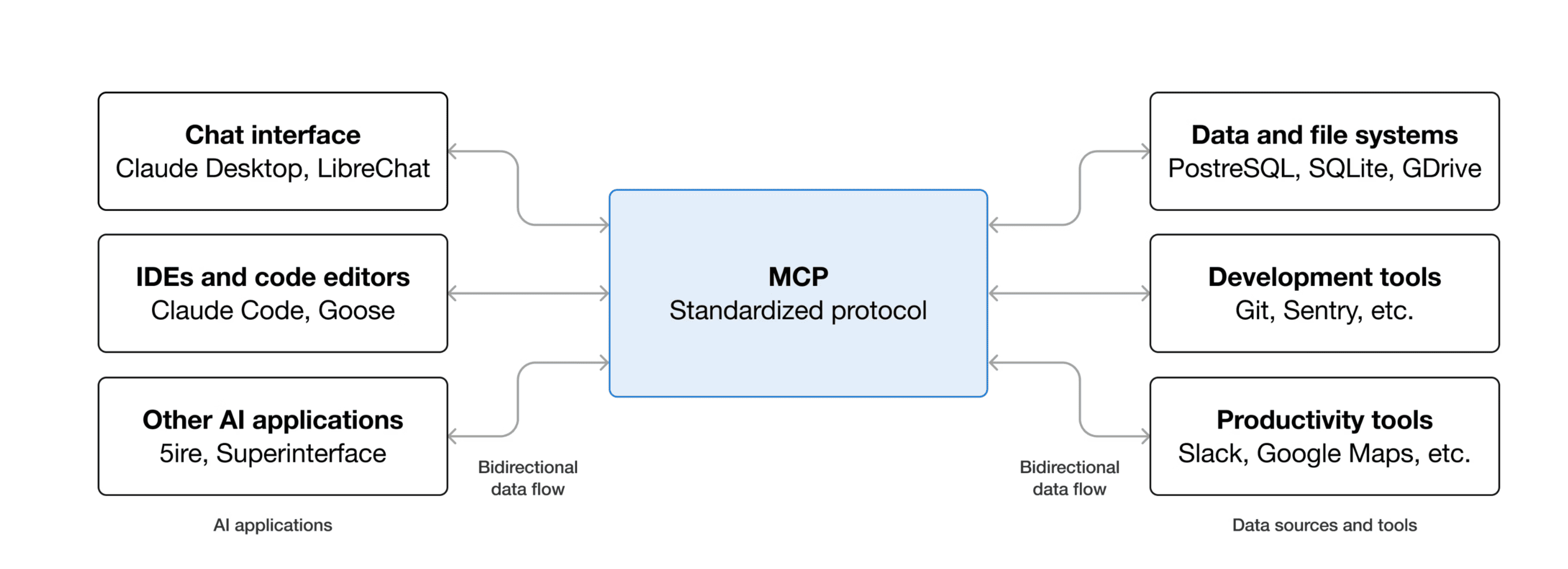

The Model Context Protocol (MCP) is emerging as the USB-C port for the AI world — a universal standard that lets intelligent systems seamlessly connect with tools, APIs, and data.

As an AI Software House and trusted Software Delivery Partner, we’re proud to bring full MCP support into our enterprise-grade AI solutions — enabling standardized, secure, and scalable connectivity across platforms.

1. What MCP Is and How It Standardizes Communication Between AI and Tools

Understanding MCP

The Model Context Protocol (MCP) is an open protocol that defines how AI models exchange context, actions, and results with external systems — whether that’s a database, CRM, ERP, or a security API.

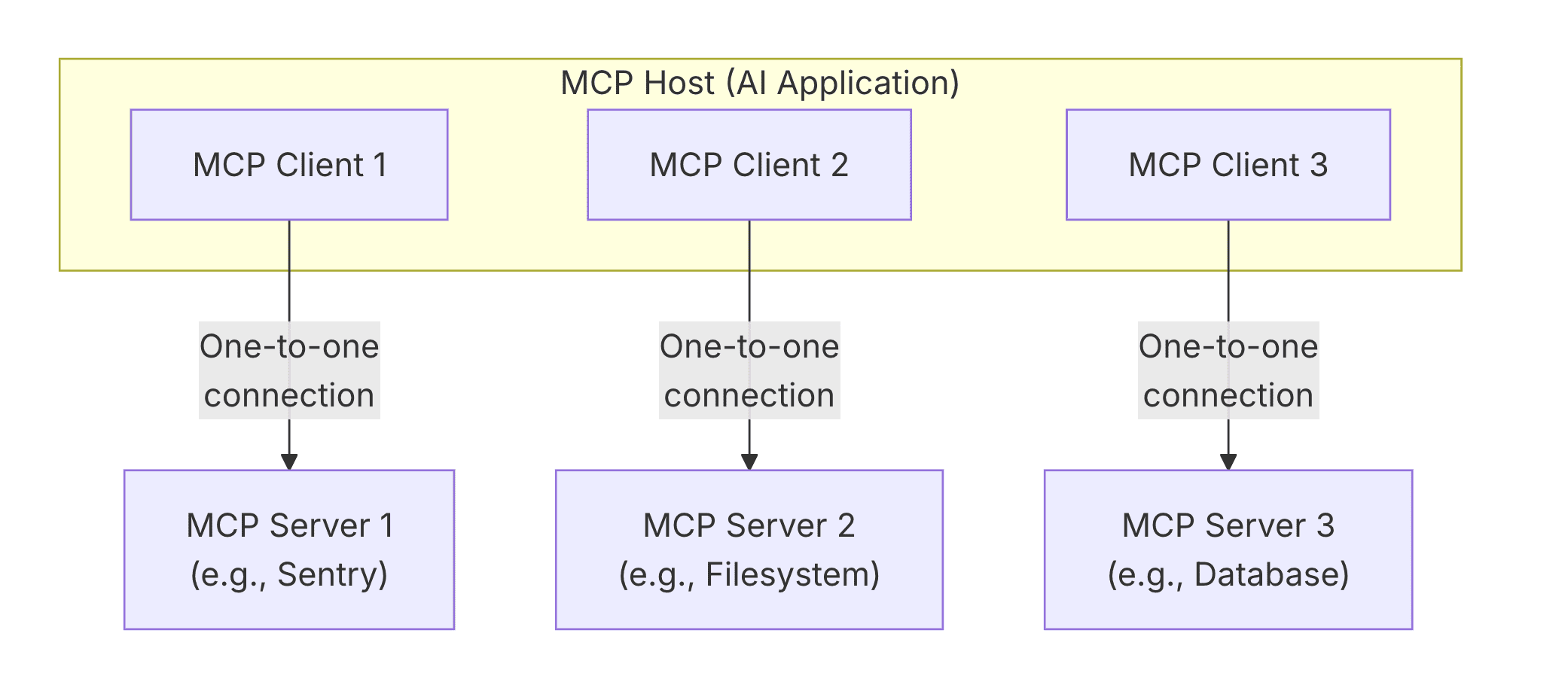

Traditionally, every integration required a unique connector or library (for example, through LangChain or custom middleware). MCP replaces all of this with a common communication layer — where the model “speaks MCP” and the system responds in kind.

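Conceptually, MCP can be viewed as three layers. This is a simplification: the specification itself is built on JSON-RPC 2.0 messages and on server primitives called resources, prompts, and tools.

- Context Layer – defines the information exchanged (state, metadata, and intent). In the specification, this corresponds most closely to resources and prompts.

- Action Layer – specifies how an AI agent can invoke operations (read, write, execute). In the specification, these are tools, invoked with the tools/call method.

- Capability Schema – exposes what a given system can do (available operations, inputs, limits). In the specification, a server lists its tools in response to tools/list, each with a JSON Schema describing its inputs.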

This standardization makes AI integrations more predictable, modular, and verifiable, creating a universal way for intelligent systems to interact with any enterprise application.
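To make the exchange concrete, here is a minimal sketch of the JSON-RPC 2.0 messages involved in tool discovery (`tools/list`) and invocation (`tools/call`). The method names and the `inputSchema`, `content`, and `isError` fields follow the public MCP specification; the `get_customer` tool, its parameter, and the sample values are hypothetical and exist only for illustration.

```python
import json

# Hypothetical tool declaration, shaped like an entry in a `tools/list` result.
CUSTOMER_TOOL = {
    "name": "get_customer",  # illustrative name, not taken from any real server
    "description": "Look up a customer record by ID.",
    "inputSchema": {
        "type": "object",
        "properties": {"customer_id": {"type": "string"}},
        "required": ["customer_id"],
    },
}


def list_tools_response(request_id: int) -> dict:
    """Build the response a server sends back for a `tools/list` request."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": [CUSTOMER_TOOL]}}


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    """Build a `tools/call` request that invokes one declared tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def call_tool_response(request_id: int, text: str) -> dict:
    """Build a successful `tools/call` result carrying a single text block."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


if __name__ == "__main__":
    print(json.dumps(list_tools_response(1), indent=2))
    print(json.dumps(call_tool_request(2, "get_customer", {"customer_id": "C-42"}), indent=2))
    print(json.dumps(call_tool_response(2, "Customer C-42: Acme Corp"), indent=2))
```

In a real deployment these messages would travel over an MCP transport (stdio or HTTP), and an SDK would normally build them for you.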

Why It Matters

- Reduced integration cost

Instead of building a new connector for each platform, MCP introduces a reusable protocol that abstracts the connection logic.

- Modularity and system independence

You can switch models or backend tools without rewriting adapters — the MCP interface remains the same.

- Declarative capabilities

Each tool declares what actions it supports and under which constraints, giving AI agents a transparent, machine-readable contract.

- Cross-tech interoperability

MCP is language-agnostic — it works across Python, Java, .NET, and Node.js environments.
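The "declarative capabilities" point can be illustrated with a small check that compares a proposed call against the contract a tool declares. This is a deliberately simplified sketch, not a full JSON Schema validator: it handles only flat objects with `required`, per-property `type`, and an optional numeric `maximum`. The `QUERY_HISTORY_SCHEMA` contract and its parameters are assumptions made up for the example.

```python
from typing import Any

# Maps the JSON Schema type names handled by this sketch to Python types.
_TYPE_MAP = {"string": str, "integer": int, "number": (int, float), "boolean": bool}

# Hypothetical contract for a history-query tool (parameter names are invented).
QUERY_HISTORY_SCHEMA = {
    "type": "object",
    "properties": {
        "indicator": {"type": "string"},
        "limit": {"type": "integer", "maximum": 100},
    },
    "required": ["indicator"],
}


def _matches(value: Any, type_name: Any) -> bool:
    """Check one value against one schema type name."""
    expected = _TYPE_MAP.get(type_name)
    if expected is None:
        return True  # unknown or missing type: not checked in this sketch
    if isinstance(value, bool):
        # bool is a subclass of int in Python, so treat it separately.
        return type_name == "boolean"
    return isinstance(value, expected)


def validate_arguments(input_schema: dict, arguments: dict) -> list:
    """Return a list of problems; an empty list means the call fits the contract."""
    problems = []
    properties = input_schema.get("properties", {})
    for name in input_schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        spec = properties.get(name)
        if spec is None:
            problems.append(f"unknown argument: {name}")
        elif not _matches(value, spec.get("type")):
            problems.append(f"{name} should be of type {spec['type']}")
        elif spec.get("type") in ("integer", "number") and value > spec.get("maximum", value):
            problems.append(f"{name} exceeds maximum {spec['maximum']}")
    return problems


if __name__ == "__main__":
    print(validate_arguments(QUERY_HISTORY_SCHEMA, {"indicator": "198.51.100.7", "limit": 10}))
    print(validate_arguments(QUERY_HISTORY_SCHEMA, {"limit": 500}))
```

A production system would more likely rely on an established JSON Schema library than on hand-written checks like these.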

For enterprise AI leaders, MCP isn’t just another framework — it’s an interoperability layer that future-proofs integrations and accelerates AI adoption across complex infrastructures.

2. What Changes in Practice — Easier AI Connections to Data, APIs, and Tools

Before MCP vs. After MCP

Before:

Each integration required its own logic. Your AI agent needed custom code to talk to Salesforce, another adapter for SAP, and yet another for your analytics layer. Each change triggered costly updates and regression testing.

After MCP:

All those systems simply expose MCP-compatible endpoints that describe available actions and data types.

Your AI agent automatically discovers them, connects, and executes tasks without needing hand-written code for each tool.
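The discovery step described above can be sketched as follows. This is an in-memory illustration under stated assumptions: `InMemoryServer` stands in for a real MCP server that would be reached over a transport, and the server names, tool names, and return values are all hypothetical.

```python
from typing import Callable


class InMemoryServer:
    """Stand-in for an MCP server; a real one would be reached over stdio or HTTP."""

    def __init__(self, name: str, tools: dict):
        self.name = name
        self._tools = tools  # tool name -> Python callable implementing it

    def list_tools(self) -> list:
        """Report which tools this server offers."""
        return sorted(self._tools)

    def call_tool(self, tool: str, **arguments) -> str:
        """Run one tool with keyword arguments."""
        return self._tools[tool](**arguments)


class Agent:
    """Builds its tool registry by asking each connected server what it offers."""

    def __init__(self, servers: list):
        self._registry = {}
        for server in servers:
            for tool in server.list_tools():
                # If two servers offer the same tool name, the later one wins here.
                self._registry[tool] = server

    def available_tools(self) -> list:
        return sorted(self._registry)

    def run(self, tool: str, **arguments) -> str:
        server = self._registry.get(tool)
        if server is None:
            raise KeyError(f"no connected server offers tool: {tool}")
        return server.call_tool(tool, **arguments)


if __name__ == "__main__":
    crm = InMemoryServer("crm", {"find_account": lambda account_id: f"account {account_id}"})
    erp = InMemoryServer("erp", {"get_invoice_total": lambda invoice_id: f"invoice {invoice_id}: 120.00"})
    agent = Agent([crm, erp])
    print(agent.available_tools())
    print(agent.run("find_account", account_id="A-1"))
```

The agent carries no CRM- or ERP-specific code: adding a third system means connecting another server, not writing another adapter.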

Practical Use Cases

- Helpdesk Automation

An AI assistant retrieves customer records from the CRM, checks ticket history, and triggers responses, all via MCP.

- Enterprise Analytics

Agents can dynamically query internal data lakes, fetch KPIs, and summarize findings in natural language.

- Sales Process Automation

AI orchestrates multiple APIs (lead scoring, CRM updates, email notifications) through standardized MCP operations.

- Customer Portals & Knowledge Bases

An AI assistant can navigate documentation, answer support questions, and reference internal policies using MCP connections to CMSs, document stores, and ticketing systems.

- Community Platforms

A community platform integrated via MCP lets the AI surface context-relevant discussions, FAQs, and tutorials directly to users, reducing support load while keeping full data traceability.

Compared with LangChain and Other Frameworks

LangChain, LlamaIndex, and similar libraries focus on workflow orchestration and prompt management.

MCP operates at a lower, protocol level — defining how AI and tools communicate, not how they’re programmed.

That means:

- It’s framework-agnostic — usable with any AI stack.

- It reduces vendor lock-in.

- It supports true interoperability between multiple ecosystems.

In essence, MCP shifts the AI ecosystem from fragmented APIs to a unified, protocol-driven fabric.

Where LangFuse Fits In

LangFuse is a popular observability and analytics platform for AI applications — offering detailed logging, tracing, and evaluation of prompts, responses, and user interactions.

In fact, MCP and LangFuse can complement each other:

- MCP defines how the AI connects to systems.

- LangFuse tracks what happens during those interactions.

In practice, enterprises could use MCP for standardized connectivity and LangFuse for quality monitoring and continuous improvement — combining interoperability with insight.

3. Adding MCP to Client Applications — Cybersecurity Case Example

One of our key projects — a Cyber Risk Mitigation Platform — showcases how MCP transforms real-world enterprise environments.


Challenge

The platform combines multiple components:

- AI modules for threat detection and risk scoring.

- Integrations with external threat intelligence APIs.

- Internal audit systems and compliance databases.

- Dashboards for cybersecurity and compliance teams.

Previously, each subsystem required its own adapter, making maintenance and upgrades difficult.

Solution

We implemented MCP Gateways as the bridge between AI agents and all external APIs.

- MCP Gateway Layer

Acts as a translator — converting MCP requests into standard API calls, handling authentication and permissions.

- Capability Schemas

Every API (e.g., threat intelligence provider) describes its operations — getIndicators, queryHistory, submitReport — in an MCP-compliant schema.

- Multi-Agent AI

The system uses multiple AI modules (for detection, scoring, and reporting), each able to access shared tools via MCP without custom adapters.

- Audit Logging

Every MCP call is recorded with context (agent, time, data scope), giving security teams traceability and accountability.
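The gateway pattern described above can be sketched roughly like this. It is an illustrative outline, not the platform's actual code: the `Gateway` and `AuditEntry` classes, the agent names, and the backend stubs are assumptions, while the operation names `getIndicators` and `submitReport` come from the list above. The result dictionaries borrow the `content` and `isError` shape of an MCP tool result.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    """One recorded call: who asked, for what, over which data, and the decision."""
    agent: str
    operation: str
    data_scope: str
    allowed: bool
    timestamp: str


@dataclass
class Gateway:
    """Translates tool calls into backend calls, with permissions and audit logging."""
    backends: dict     # operation name -> callable taking an arguments dict
    permissions: dict  # agent name -> set of operation names it may invoke
    audit_log: list = field(default_factory=list)

    def handle(self, agent: str, operation: str, arguments: dict, data_scope: str) -> dict:
        # A call is allowed only if the agent holds the permission
        # and the gateway actually knows the operation.
        allowed = operation in self.permissions.get(agent, set()) and operation in self.backends
        # Record every attempt, including denied ones, before doing any work.
        self.audit_log.append(
            AuditEntry(agent, operation, data_scope, allowed, datetime.now(timezone.utc).isoformat())
        )
        if not allowed:
            text = f"{operation} denied for {agent}"
            return {"isError": True, "content": [{"type": "text", "text": text}]}
        result = self.backends[operation](arguments)
        return {"isError": False, "content": [{"type": "text", "text": str(result)}]}


if __name__ == "__main__":
    gateway = Gateway(
        backends={
            "getIndicators": lambda args: {"indicators": ["198.51.100.7"]},  # stub backend
            "submitReport": lambda args: {"status": "accepted"},             # stub backend
        },
        permissions={"detection": {"getIndicators"}, "reporting": {"submitReport"}},
    )
    print(gateway.handle("detection", "getIndicators", {}, data_scope="threat-intel"))
    print(gateway.handle("detection", "submitReport", {}, data_scope="compliance"))
    print(gateway.audit_log)
```

Logging before execution means denied attempts are also visible to the security team, which is usually what an audit trail is for.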

Results

- Faster feature rollout — new AI functions can connect instantly without integration overhead.

- Reduced maintenance — tool upgrades no longer break existing logic.

- Operational transparency — full traceability of every AI-triggered action.

- Improved compliance posture — auditable, standardized data access across systems.

This demonstrates how we, as an AI Software House and Software Delivery Partner, use MCP to deliver scalable, secure, and extensible architectures for enterprise AI systems.

https://www.ibm.com/think/topics/model-context-protocol

4. Enterprise Benefits — Security, Interoperability, and Compliance

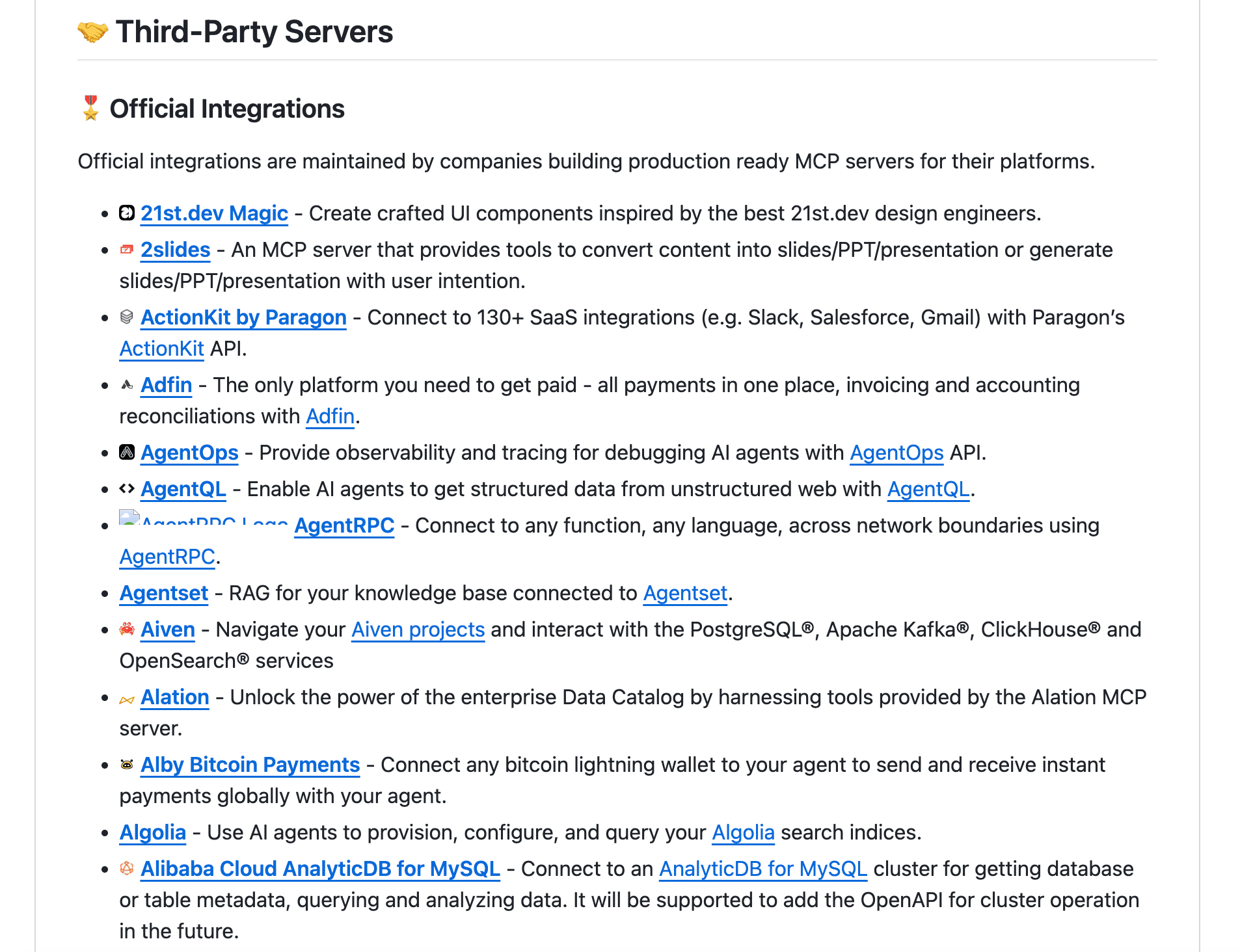

Example list of official MCP integrations

For enterprises, MCP brings measurable value in four main areas:

Security

- Controlled access — separates AI reasoning from data operations, minimizing risk of unauthorized calls.

- Fine-grained permissions — each tool can declare which operations and parameters are allowed.

- Auditable trails — every request and response can be logged for forensic or compliance purposes.

- Rate limiting and throttling – limits enforced by the MCP server or gateway (rather than by the protocol messages themselves) to prevent API overload or misuse.
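Rate limiting of tool calls is commonly implemented as a token bucket inside the server or gateway. The following is a minimal sketch of that technique, assuming a single-threaded caller; the `TokenBucket` class and its parameters are illustrative and not part of any MCP SDK. The clock is injectable so the behaviour can be tested without sleeping.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows short bursts up to `capacity`, then a steady `refill_per_second` rate."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)  # start full
        self._last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if a call may proceed right now."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=2, refill_per_second=1.0)
    print([bucket.allow() for _ in range(3)])
```

A gateway would typically keep one bucket per agent or per tool and reject or queue calls when `allow()` returns False.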

Interoperability and Modularity

- Unified communication across diverse tools — no matter the language or vendor.

- Modular architecture — add or replace components without re-engineering.

- Freedom from vendor lock-in — any MCP-compliant tool becomes plug-and-play.

Compliance Readiness

MCP is not tied to any specific compliance framework, but it aligns naturally with data security, AI governance, and privacy standards such as ISO 27001, as well as with internal corporate controls.

Because actions are explicit, documented, and traceable, MCP simplifies:

- Access management,

- Risk monitoring,

- Explainability of AI decisions,

- Internal audit documentation.

This makes it easier to embed AI safely within regulated or risk-sensitive environments.

Observability and Operational Control

- Real-time dashboards tracking all MCP calls.

- Policy enforcement at the protocol layer (e.g., sensitive actions require confirmation).

- Versioning and rollback support for stable API evolution.
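The "sensitive actions require confirmation" policy can be sketched as a thin wrapper around tool execution. This is an assumption-laden illustration: the `enforce_policy` function, the `SENSITIVE_OPERATIONS` set, and the callback signatures are hypothetical, and which operations count as sensitive is a decision for each deployment.

```python
from typing import Any, Callable

# Assumption: the deployment decides which operations need human confirmation.
SENSITIVE_OPERATIONS = {"submitReport"}


def enforce_policy(
    operation: str,
    arguments: dict,
    execute: Callable[[str, dict], Any],
    confirm: Callable[[str, dict], bool],
) -> dict:
    """Run `execute` unless the operation is sensitive and `confirm` declines it."""
    if operation in SENSITIVE_OPERATIONS and not confirm(operation, arguments):
        # The backend is never touched when confirmation is refused.
        return {"status": "rejected", "reason": "confirmation required"}
    return {"status": "ok", "result": execute(operation, arguments)}


if __name__ == "__main__":
    def execute(operation: str, arguments: dict) -> str:
        return f"executed {operation}"

    def always_decline(operation: str, arguments: dict) -> bool:
        return False

    print(enforce_policy("getIndicators", {}, execute, always_decline))
    print(enforce_policy("submitReport", {}, execute, always_decline))
```

In practice `confirm` would prompt a human operator or consult an approval workflow rather than return a fixed value.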

For CTOs and Heads of AI, this means turning complex AI ecosystems into manageable, transparent systems of record.

5. How We Support Our Clients

As your AI Software House and Software Delivery Partner, we provide:

- Architecture Audit – identify integration bottlenecks.

- Proof of Concept (PoC) – demonstrate MCP in your real environment.

- Integration Migration – transition legacy connectors into a unified protocol layer.

- Full-scale Deployment – production-ready MCP gateways with observability.

- Training & Enablement – technical workshops and SDK onboarding.

FAQ: Understanding MCP and AI Interoperability

What is the Model Context Protocol (MCP)?

MCP is an open protocol that standardizes how AI systems communicate with tools, APIs, and data sources. It defines a shared structure for exchanging actions, context, and results — similar to how HTTP standardizes web communication.

How is MCP different from LangChain or LangFuse?

LangChain is a framework for orchestrating prompts and workflows, while LangFuse is a monitoring and analytics platform.

MCP, in contrast, is a protocol — it focuses on the actual data exchange and interoperability layer. MCP can work alongside LangChain or LangFuse to provide both connectivity and observability.

Can MCP be used with any AI model?

Yes. MCP is model-agnostic. Whether you use OpenAI, Anthropic, Gemini, or custom LLMs, any model whose host application implements an MCP client can access MCP-enabled tools.

Is MCP production-ready?

Yes. Several AI vendors and open-source communities are actively adopting MCP as a universal interoperability standard. We’ve successfully deployed it in enterprise environments requiring security, traceability, and controlled data access.

Why should enterprises adopt MCP now?

Because AI ecosystems are becoming increasingly fragmented. MCP brings structure, governance, and consistency — essential for long-term scalability and compliance.

Recommended Sources for MCP / AI Integration

| Title | Why It’s Valuable | Key Highlights / Relevance |
| --- | --- | --- |
| Introducing the Model Context Protocol (Anthropic) | Primary / canonical source | Official announcement, design goals, SDKs, reasoning behind MCP. |
| MCP Specification (modelcontextprotocol.io) | Authoritative technical spec | The detailed specification and schema definitions. |
| What is MCP? (IBM) | Reputable corporate explanation | Clear lay and technical explanation from IBM’s perspective. |
